Welcome to Part 2 of our deep dive into advanced search techniques with PostgreSQL! In Part 1, we explored the foundations of full-text search using PostgreSQL’s native capabilities and integrated them with Next.js and Prisma. Now, we’re taking it up a notch.

In this article, we’ll unlock the power of pgvector, a PostgreSQL extension that enables vector similarity search. We’ll explore how pgvector can revolutionize your search capabilities, allowing for semantic understanding and even geospatial searches. By the end of this tutorial, you’ll be able to implement cutting-edge search features that go beyond simple keyword matching.

Here’s what we’ll cover:

- Understanding pgvector and vector embeddings

- Setting up pgvector in your PostgreSQL database

- Implementing semantic text search with pgvector

- Leveraging pgvector for geospatial searches

- Integrating these advanced search capabilities with Next.js

Let’s dive in and take your search functionality to the next level!

Table of Contents

While PostgreSQL’s built-in full-text search capabilities are powerful, sometimes we need even smarter ways to search through text. That’s where pgvector comes in. It’s an extension for PostgreSQL that adds support for vector similarity search, which is particularly useful for more advanced search scenarios.

What is pgvector?

pgvector is a tool that helps PostgreSQL understand the meaning behind words, not just match them exactly. It turns words and sentences into numbers (called vectors) that capture their meaning, allowing for more intelligent searching.

Why Use pgvector?

Using pgvector offers several benefits over traditional full-text search:

-

Semantic Understanding: Unlike regular text search, which looks for exact matches, pgvector can understand the meaning of words and phrases.

-

Similarity Search: It can find things that are similar in meaning, even if they don’t use the same words. This often leads to better search results.

-

Works in Multiple Languages: With the right setup, pgvector can handle searches across different languages without needing separate configurations for each language.

-

Handles New Words: It can often guess the meaning of words it hasn’t seen before based on the context.

-

Flexible for Different Uses: While PostgreSQL has tools for location-based searches (like PostGIS), pgvector can be more flexible, especially when combining location data with other types of information.

These features make pgvector especially useful for advanced searches, including understanding the meaning behind text (semantic search) and working with location data in complex ways.

Let’s explore how to set up and use pgvector in your own projects to take advantage of these powerful search capabilities.

Understanding Vector Embeddings

Vector embeddings represent words, phrases, or entire documents as vectors of real numbers. These vectors capture semantic meaning, allowing for more nuanced and context-aware searches.

For example, in a good embedding space:

- The vector for “king” - “man” + “woman” might be close to the vector for “queen”.

- The vectors for “dog” and “cat” might be closer to each other than either is to “airplane”.

This allows for semantic searches that understand context and meaning, not just exact word matches.

pgvector is an extension for PostgreSQL that allows for efficient storage and retrieval of vector embeddings, which can be used for advanced text search and similarity comparisons.

Installing pgvector

Before we can use pgvector, we need to install it. The installation process varies depending on your operating system:

Note: Ensure that you have PostgreSQL installed and running before attempting to install pgvector.

For macOS (using Homebrew):

brew install pgvectorFor Linux (Ubuntu/Debian):

sudo apt-get update

sudo apt-get install postgresql-14-pgvectorReplace 14 with your PostgreSQL version number if different.

For Windows:

Windows users typically need to build pgvector from source:

- Download and install PostgreSQL

- Download the pgvector source code from GitHub

- Build the extension using Visual Studio or MinGW

Note: For detailed Windows installation instructions, refer to the pgvector GitHub repository.

After installation, connect to your PostgreSQL database and create the extension:

CREATE EXTENSION IF NOT EXISTS vector;If you encounter any issues, ensure that:

- Your PostgreSQL version is compatible with the installed pgvector version

- The pgvector files are in the correct PostgreSQL extension directory

- You have the necessary permissions to create extensions

Text Search with pgvector

Now that we have pgvector installed, let’s set up our table for vector-based text search. First, we’ll drop the existing articles table if it exists, and then create a new one that includes a column for vector embeddings:

-- Drop the existing table if it exists

DROP TABLE IF EXISTS articles;

-- Create a new table with a vector column

CREATE TABLE articles (

id SERIAL PRIMARY KEY,

title TEXT,

content TEXT,

embedding vector(384) -- 384-dimensional vector for text embedding

);

-- Insert sample data

INSERT INTO articles (title, content) VALUES

('Time Travel Theories', 'Exploring various theories about time travel...'),

('The Future of AI', 'Artificial Intelligence is rapidly evolving...'),

('Space Exploration', 'The latest developments in space exploration...');We’re using a 384-dimensional vector because that’s the output size of the embedding model we’ll be using.

To perform a search, we need to convert our text to vectors. We’ll use the all-MiniLM-L6-v2 model from the sentence-transformers library, which provides a good balance between performance and accuracy for many applications.

It’s important to note that PostgreSQL’s native full-text search doesn’t use a machine learning model. Instead, it uses lexemes and language-specific dictionaries to understand word variations. The all-MiniLM-L6-v2 model we’re using here is a deep learning model that creates dense vector representations of text.

This approach has several advantages over native full-text search:

- Semantic Understanding: It can capture semantic relationships between words, even if they don’t appear together in documents.

- Multilingual Capability: With the right model, it can work across multiple languages without separate configuration.

- Fuzzy Matching: It naturally handles typos and variations in wording.

However, native full-text search still has its place:

- Speed: For exact match queries, native full-text search can be faster.

- Simplicity: It doesn’t require managing and updating embeddings.

- Integration: It’s more tightly integrated with PostgreSQL’s query planner and other features.

In practice, you might use both: native full-text search for straightforward queries and pgvector for more nuanced, semantic searches.

Setting Up the JavaScript Environment for Embeddings

To generate embeddings for our text data, we’ll use the @xenova/transformers package. Here’s how to set it up:

-

Initialize a new Node.js project if you haven’t already:

mkdir pgvector-project cd pgvector-project npm init -y -

Install the required package:

npm install @xenova/transformers -

Modify your

package.jsonto use ES modules by adding the following line:{ ... "type": "module" } -

Create a new file called

embeds.jsand add the following code:import { pipeline } from '@xenova/transformers'; let embeddingModel; async function getEmbedding(text) { if (!embeddingModel) { embeddingModel = await pipeline('feature-extraction', 'Xenova/all-MiniLM-L6-v2'); } const output = await embeddingModel(text, { pooling: 'mean', normalize: true }); return Array.from(output.data); } // Example usage async function main() { const embedding = await getEmbedding('Hello, world!'); console.log(embedding); } main().catch(console.error); -

Run the script:

node embeds.js

This script sets up a function getEmbedding that uses the Xenova/all-MiniLM-L6-v2 model to generate embeddings for input text. The first time you run it, it may take a moment to download the model.

Note: Make sure you’re running this script in the same directory where you installed the

@xenova/transformerspackage.

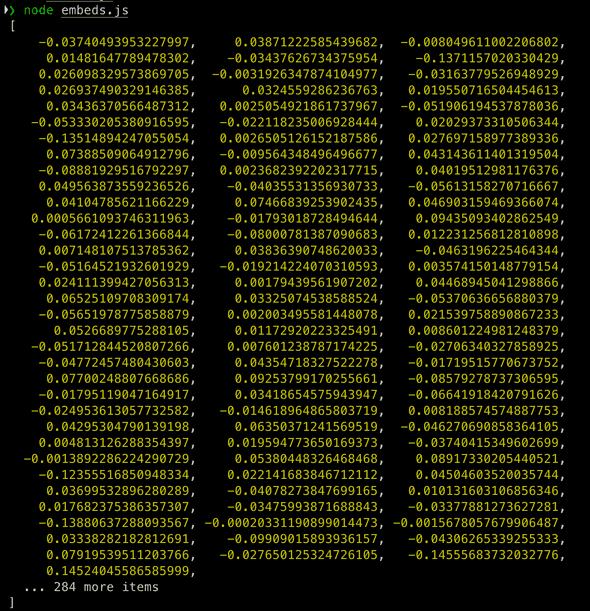

After running the script with node embeds.js, you should see output similar to this:

Figure 1: Full output of the embedding vector (all 384 dimensions)

Figure 1: Full output of the embedding vector (all 384 dimensions)

This output represents the 384-dimensional embedding vector for the text “Hello, world!“. Each number in this vector captures a different aspect of the input text’s meaning in a way that’s useful for machine learning models.

Note: The exact values in your output may differ slightly due to the nature of the model and potential updates, but the structure should be similar.

Next, we’ll use this function to generate embeddings for our article data and store them in our PostgreSQL database.

import pkg from 'pg';

const { Client } = pkg;

import { pipeline } from '@xenova/transformers';

let embeddingModel;

async function getEmbedding(text) {

if (!embeddingModel) {

embeddingModel = await pipeline('feature-extraction', 'Xenova/all-MiniLM-L6-v2');

}

const output = await embeddingModel(text, { pooling: 'mean', normalize: true });

return Array.from(output.data);

}

async function updateArticlesWithEmbeddings() {

const client = new Client({

connectionString: 'postgresql://youruser:yourpassword@localhost:5432/full_text_search_db'

});

await client.connect();

const res = await client.query('SELECT id, title, content FROM articles');

for (const row of res.rows) {

const embedding = await getEmbedding(row.title + ' ' + row.content);

const formattedEmbedding = `[${embedding.join(',')}]`;

await client.query('UPDATE articles SET embedding = $1 WHERE id = $2', [formattedEmbedding, row.id]);

}

await client.end();

}

updateArticlesWithEmbeddings().catch(console.error);To perform a similarity search, you can use a query like this:

import pkg from 'pg';

const { Client } = pkg;

import { pipeline } from '@xenova/transformers';

let embeddingModel;

async function getEmbedding(text) {

if (!embeddingModel) {

embeddingModel = await pipeline('feature-extraction', 'Xenova/all-MiniLM-L6-v2');

}

const output = await embeddingModel(text, { pooling: 'mean', normalize: true });

return Array.from(output.data);

}

async function searchArticles(searchTerm) {

const client = new Client({

connectionString: 'postgresql://youruser:yourpassword@localhost:5432/full_text_search_db'

});

await client.connect();

const queryEmbedding = await getEmbedding(searchTerm);

const formattedEmbedding = `[${embedding.join(',')}]`;

const res = await client.query(`

SELECT title, content

FROM articles

ORDER BY embedding <-> $1

LIMIT 5

`, formattedEmbedding);

await client.end();

return res.rows;

}

// Usage

searchArticles('time travel').then(console.log).catch(console.error);This query will return the top 5 articles most similar to the search term “time travel”.

>> node search-articles.js

[

{

title: 'Time Travel Theories',

content: 'Exploring various theories about time travel...'

},

{

title: 'Space Exploration',

content: 'The latest developments in space exploration...'

},

{

title: 'The Future of AI',

content: 'Artificial Intelligence is rapidly evolving...'

}

]Now that we’ve explored how pgvector can enhance text search capabilities, let’s turn our attention to another powerful application: geospatial search. While pgvector is primarily known for its text search capabilities, its versatility extends to efficient location-based queries as well. This makes pgvector an attractive option for applications that require both semantic text search and geospatial functionality.

Geospatial Search with pgvector

While pgvector is primarily known for its text search capabilities, it can also be effectively used for geospatial searches. This versatility makes pgvector an attractive option for applications that require both semantic text search and location-based queries.

Understanding Geospatial Search with pgvector

In geospatial search, we typically work with latitude and longitude coordinates. pgvector allows us to represent these coordinates as two-dimensional vectors, enabling efficient similarity searches based on geographic proximity.

The key idea is to use the vector distance as a proxy for geographic distance. While this approach doesn’t provide the same level of accuracy as specialized geospatial databases like PostGIS, it offers a simpler and often faster alternative for many use cases, especially when combined with other vector-based features.

Setting Up the Database for Geospatial Search

Let’s set up a table to store locations in London. We’ll use some famous landmarks as our data points.

First, connect to your PostgreSQL database:

psql -d your_database_nameNow, let’s create our table and insert some data:

-- Create the locations table

CREATE TABLE london_landmarks (

id SERIAL PRIMARY KEY,

name TEXT NOT NULL,

coordinates vector(2)

);

-- Insert some famous London landmarks

INSERT INTO london_landmarks (name, coordinates) VALUES

('Big Ben', '[51.500729, -0.124625]'),

('London Eye', '[51.503399, -0.119519]'),

('Tower Bridge', '[51.505456, -0.075356]'),

('Buckingham Palace', '[51.501364, -0.141890]'),

('British Museum', '[51.519459, -0.126931]'),

('St Paul''s Cathedral', '[51.513845, -0.098351]'),

('Trafalgar Square', '[51.508039, -0.128069]'),

('Piccadilly Circus', '[51.510067, -0.134955]'),

('Hyde Park', '[51.507351, -0.165769]'),

('Tate Modern', '[51.507748, -0.099469]');Querying for Nearby Landmarks

Now that we have our data, let’s perform a geospatial search to find the nearest landmarks to a given location. We’ll use the coordinates of Covent Garden as our reference point. We’ll use the coordinates of Covent Garden as our reference point.

In your PostgreSQL session, run the following query:

-- Find the 5 nearest landmarks to Covent Garden

SELECT name, coordinates,

coordinates <-> '[51.512788, -0.123636]'::vector AS distance

FROM london_landmarks

ORDER BY coordinates <-> '[51.512788, -0.123636]'::vector

LIMIT 5;In this query, we introduce the <-> operator, which is key to our geospatial search. This operator calculates the Euclidean distance between two vectors. In our case, it’s measuring the distance between each landmark’s coordinates and the reference point (Covent Garden). The ORDER BY clause uses this operator to sort the results by proximity.

This query should return results similar to:

name | coordinates | distance

-------------------+-----------------------+----------------------

Trafalgar Square | [51.508038,-0.128069] | 0.006496715315365807

British Museum | [51.51946,-0.126931] | 0.007441191364721942

London Eye | [51.5034,-0.119519] | 0.01025103134202598

Piccadilly Circus | [51.510067,-0.134955] | 0.011641202336673885

Big Ben | [51.50073,-0.124625] | 0.01209874823667839Let’s break this down:

- Reference Point: The coordinates

[51.512788, -0.123636]represent Covent Garden. - Distance Unit: The distance values returned by

pgvectorare not in standard units like meters or kilometers. Instead, they represent the Euclidean distance between the vectors in the coordinate space. In this case, since we’re using latitude and longitude directly as vector components, these distances are in degrees.

To convert these to approximate distances in kilometers, you can multiply by 111.32 (the average number of kilometers per degree at the equator). For example:

- Trafalgar Square: 0.006496715315365807 * 111.32 ≈ 0.72 km

- British Museum: 0.007441191364721942 * 111.32 ≈ 0.83 km

- Results Interpretation:

- Trafalgar Square is the closest landmark to Covent Garden, which makes sense as they’re both in central London.

- The British Museum is the second closest, followed by the London Eye, Piccadilly Circus, and Big Ben.

- These results align with the actual geography of central London, demonstrating the effectiveness of pgvector for approximate geospatial queries.

It’s important to note that while this method provides a good approximation for nearby locations, it doesn’t account for the curvature of the Earth. For more precise calculations, especially over larger distances, you might need to use more specialized geospatial functions or databases.

Implementing Geospatial Search in JavaScript

Now that we’ve seen how to perform geospatial searches directly in PostgreSQL, let’s implement this functionality in a Node.js script:

import pkg from 'pg';

const { Client } = pkg;

async function findNearbyLandmarks(lat, lon) {

const client = new Client({

connectionString: 'postgresql://username:password@localhost:5432/your_database_name'

});

await client.connect();

const query = `

SELECT name, coordinates,

coordinates <-> $1::vector AS distance

FROM london_landmarks

ORDER BY coordinates <-> $1::vector

LIMIT 5

`;

const values = [`[${lat}, ${lon}]`];

const res = await client.query(query, values);

await client.end();

return res.rows;

}

// Usage

findNearbyLandmarks(51.512788, -0.123636)

.then(landmarks => {

console.log('Nearby landmarks:');

landmarks.forEach(landmark => {

console.log(`${landmark.name} - Distance: ${landmark.distance}`);

});

})

.catch(console.error);This script defines a findNearbyLandmarks function that takes a latitude and longitude as input and returns the five nearest landmarks from our database.

Integration with Next.js

To integrate this functionality into a Next.js application, you could create an API route that handles the geospatial search:

// pages/api/nearby-landmarks.js

import { findNearbyLandmarks } from '../../lib/geospatial-search';

export default async function handler(req, res) {

const { lat, lon } = req.query;

if (!lat || !lon) {

return res.status(400).json({ error: 'Latitude and longitude are required' });

}

try {

const landmarks = await findNearbyLandmarks(parseFloat(lat), parseFloat(lon));

res.status(200).json(landmarks);

} catch (error) {

console.error(error);

res.status(500).json({ error: 'An error occurred while searching for nearby landmarks' });

}

}Then, in your Next.js pages or components, you can fetch nearby landmarks like this:

async function getNearbyLandmarks(lat, lon) {

const response = await fetch(`/api/nearby-landmarks?lat=${lat}&lon=${lon}`);

const landmarks = await response.json();

// Handle the results (e.g., update state to display them)

}This approach keeps the database queries on the server-side, which is generally more secure and performant.

Limitations and Considerations

While pgvector provides a simple and efficient way to perform geospatial searches, it’s important to note some limitations:

-

Accuracy: The vector distance is an approximation of the actual geographic distance. For more precise calculations, especially over larger distances, specialized geospatial databases like PostGIS might be more appropriate.

-

Scalability: As your dataset grows, you might need to implement additional optimizations or consider using dedicated geospatial indexing solutions.

-

Dimension Limit: pgvector is limited to 3 dimensions, which is sufficient for most geospatial use cases (latitude, longitude, and optionally altitude), but may not be suitable for more complex spatial data.

Despite these limitations, pgvector’s geospatial capabilities are often sufficient for many applications, especially those that require both text and location-based searches. Its simplicity and integration with PostgreSQL make it an attractive option for developers looking to add basic geospatial functionality to their applications.

Comparison of Search Technologies

To better understand where pgvector fits in the landscape of search technologies, let’s compare its capabilities with traditional full-text search and specialized geospatial databases:

| Feature | pgvector | Traditional Full-Text Search | Specialized Geospatial DB (e.g., PostGIS) |

|---|---|---|---|

| Semantic Understanding | Strong | Limited | N/A |

| Exact Keyword Matching | Good | Excellent | N/A |

| Geospatial Search | Good | Limited | Excellent |

| Query Speed | Fast | Very Fast | Very Fast |

| Scalability | Good | Excellent | Excellent |

| Ease of Setup | Moderate | Easy | Complex |

| Multi-modal Search | Possible | Limited | Limited |

This comparison highlights that while pgvector may not be the best in every category, its strength lies in its versatility and ability to handle both semantic and geospatial searches effectively.

Conclusion

Congratulations! You’ve now mastered advanced search techniques using pgvector with PostgreSQL. Let’s recap what we’ve accomplished:

- We’ve explored the power of vector embeddings and how they enable semantic search.

- You’ve learned how to set up and use pgvector in your PostgreSQL database.

- We’ve implemented semantic text search, allowing for more intuitive and context-aware queries.

- You’ve seen how pgvector can be leveraged for efficient geospatial searches.

- We’ve integrated these advanced search capabilities with Next.js, making them ready for real-world applications.

By combining the techniques from Part 1 (native PostgreSQL full-text search) with the advanced capabilities of pgvector we’ve explored here, you now have a powerful toolkit for implementing sophisticated search functionality in your applications.

As you continue to refine your search implementations, consider these next steps:

- Hybrid Search Systems: Combine traditional full-text search with vector similarity for the best of both worlds.

- Fine-tuning Embeddings: Experiment with different embedding models or even fine-tune them on your specific dataset for improved relevance.

- Performance Optimization: Benchmark your search queries and optimize indexes for your specific use cases.

- Advanced ML Integration: Explore clustering or classification techniques to further enhance your search results.

- Scalability Considerations: As your data grows, investigate sharding or distributed search solutions to maintain performance.

Looking to the future, vector databases like pgvector are poised to play an increasingly important role in search technologies. The ability to efficiently handle high-dimensional data opens up possibilities for more nuanced and context-aware search experiences. We can expect to see advancements in areas such as:

- Multi-modal search: Combining text, image, and even audio embeddings for more comprehensive search capabilities.

- Real-time recommendation systems: Leveraging vector similarity for lightning-fast, personalized recommendations.

- Improved natural language understanding: As language models evolve, so too will the quality and utility of text embeddings.

- Edge computing applications: As vector operations become more efficient, we may see more search capabilities pushed to edge devices.

These developments promise to make search not just faster, but smarter and more intuitive. By mastering pgvector now, you’re well-positioned to take advantage of these exciting future developments in search technology.

Remember, while pgvector and PostgreSQL offer powerful search capabilities, very large-scale applications might benefit from dedicated search engines like Elasticsearch or Algolia. However, for most applications, the techniques we’ve covered here will provide excellent performance, flexibility, and semantic understanding.

By mastering these advanced search techniques, you’re well-equipped to create intelligent, efficient, and user-friendly search experiences that will set your applications apart. Keep experimenting and refining your approach, and you’ll be amazed at the search capabilities you can achieve!